Marcus Hutter proposes a definition of Universal Intelligence and offers a mathematical theory describing the optimal intelligent agent, AIXI.

Marcus Hutter is a researcher in many areas, including Universal Artificial Intelligence. The approach he and others, such as Schmidhuber and Hochreiter, have taken differs from other approaches to AI, both modern and older.


A top-down mathematical theory

Hutter takes a top-down approach: he proposes a mathematical definition of intelligence and derives the properties of intelligent agents by proving mathematical theorems.

Other approaches are bottom-up: they focus on building agents that solve problems in a narrow domain and then look for ways to make them more general, so that they can handle previously unseen problems or problems from a different domain.

Defining an intelligent agent

An informal definition of intelligence proposed by Hutter & Legg is:

The ability to achieve goals in a wide range of environments.

This definition is general enough that it can be applied to non-human agents.
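In the Legg & Hutter paper listed in the references, this informal definition is made precise as a universal intelligence measure for an agent \(\pi\). Roughly, it scores the agent's performance across all computable environments, giving simpler environments more weight:

\[ \Upsilon(\pi) := \sum_{\mu \in E} 2^{-K(\mu)} \, V^{\pi}_{\mu} \]

Here \(E\) is the class of computable environments (with bounded total reward), \(K(\mu)\) is the Kolmogorov complexity of environment \(\mu\), and \(V^{\pi}_{\mu}\) is the expected total reward that \(\pi\) earns in \(\mu\). The factor \(2^{-K(\mu)}\) plays the same role as the simplicity weight \(2^{-\ell(q)}\) in the AIXI equation.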

Hutter sets out to formalize this definition. The result is an equation defining the optimal intelligent agent, AIXI, as follows:

\[ { \color{blue} AIXI }\quad { \color{green} a_k } := { \color{green} \arg \max_{a_k} } { \color{red} \sum_{ {\color{brightpink} o_k} {\color{purple} r_k} } } … { \color{green} \max_{a_m} } { \color{red} \sum_{ {\color{brightpink} o_m} {\color{purple} r_m} } } {\color{purple} [r_k + … + r_m]} {\color{blue} \sum_{ {\color{goldenrod} q} :U( {\color{goldenrod} q}, {\color{green} a_1 … a_m} ) = {\color{brightpink} o_1} {\color{purple} r_1} … {\color{brightpink} o_m} {\color{purple} r_m} } 2^{-\ell( {\color{goldenrod} q} )} } \]

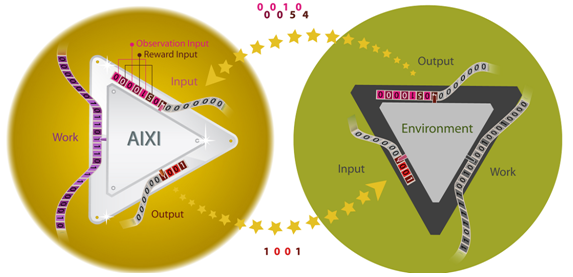

The AIXI agent learns via reinforcement: its goal is to take the action that maximizes the total future reward provided by the environment.

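To estimate its total future reward, AIXI computes an expectation. The sums over future observations and rewards in the equation average the reward \(r_k + \ldots + r_m\) over the environments consistent with the interaction history so far, while the \(\max\) operators assume the agent will also act optimally in later cycles.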
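The alternating \(\max\) and \(\sum\) can be sketched as a recursive expectimax in Python. This is a toy, not AIXI itself: real AIXI sums over every program \(q\) for a universal Turing machine \(U\) and is incomputable, so here a hypothetical hand-picked list of four deterministic environment programs, with made-up lengths \(\ell(q)\), stands in for that space. The names `ENVIRONMENTS`, `action_value`, `value`, and `best_action` are illustrative only.

```python
from fractions import Fraction

ACTIONS = (0, 1)

# Hypothetical stand-ins for the programs q: each maps the action sequence
# a_1..a_k to the percepts (o_i, r_i) it emits, paired with an assumed length
# l(q) in bits. Observations are fixed at 0 to keep the example small.
ENVIRONMENTS = [
    (3, lambda acts: [(0, 1) for _ in acts]),                   # always rewards
    (5, lambda acts: [(0, 1 if a == 1 else 0) for a in acts]),  # rewards action 1
    (5, lambda acts: [(0, 1 if a == 0 else 0) for a in acts]),  # rewards action 0
    (9, lambda acts: [(0, i % 2) for i in range(len(acts))]),   # alternates 0, 1
]


def action_value(actions, percepts, action, steps_left):
    """Unnormalized value of taking `action` now: sum over the next percept,
    weighted by 2^-l(q) of the programs producing it, plus the best continuation."""
    next_actions = actions + [action]
    branches = {}  # next percept -> total weight of programs that emit it
    for length, program in ENVIRONMENTS:
        output = program(next_actions)
        if output[:-1] == percepts:  # q must reproduce the history so far
            branches[output[-1]] = branches.get(output[-1], Fraction(0)) + Fraction(1, 2 ** length)
    total = Fraction(0)
    for (obs, reward), weight in branches.items():
        # With deterministic programs, the current reward can be weighted once
        # here instead of being carried to the end of every future branch.
        total += reward * weight + value(next_actions, percepts + [(obs, reward)], steps_left - 1)
    return total


def value(actions, percepts, steps_left):
    """Max over actions of action_value; zero once the horizon is reached."""
    if steps_left == 0:
        return Fraction(0)
    return max(action_value(actions, percepts, a, steps_left) for a in ACTIONS)


def best_action(actions, percepts, horizon):
    """The argmax over the first action, as in the AIXI equation."""
    return max(ACTIONS, key=lambda a: action_value(actions, percepts, a, horizon))
```

After the history `actions=[1]`, `percepts=[(0, 1)]`, only the "always rewards" and "rewards action 1" programs remain consistent, so `best_action([1], [(0, 1)], 1)` returns 1 (value 5/32 versus 1/8 for action 0). Like the AIXI equation, the sketch never divides by the total weight of the consistent programs; that normalization is a positive constant and does not change the argmax.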

In each cycle \(k\), the agent takes an action \(a_k\) based on what it has observed so far; the environment in turn responds with an observation \(o_k\) and a reward \(r_k\).
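The interaction protocol itself is a simple loop. The sketch below assumes a hypothetical `ToyEnvironment` whose observation is a step counter and whose reward favors action 1, and a placeholder policy that just alternates actions; an AIXI agent would instead choose each \(a_k\) with the expectimax in the equation.

```python
class ToyEnvironment:
    """Hypothetical environment: the observation is a step counter and the
    reward is 1 whenever the agent chooses action 1, else 0."""

    def __init__(self):
        self.steps = 0

    def step(self, action):
        self.steps += 1
        observation = self.steps
        reward = 1 if action == 1 else 0
        return observation, reward


def interact(policy, environment, cycles):
    """Run the agent-environment loop; return the history as (a_k, o_k, r_k) triples."""
    history = []
    for _ in range(cycles):
        action = policy(history)                        # agent acts on the history so far
        observation, reward = environment.step(action)  # environment answers with o_k, r_k
        history.append((action, observation, reward))
    return history


# Placeholder policy: alternate between actions 0 and 1.
history = interact(lambda h: len(h) % 2, ToyEnvironment(), cycles=4)
total_reward = sum(r for _, _, r in history)
```

With the alternating policy, the history is `[(0, 1, 0), (1, 2, 1), (0, 3, 0), (1, 4, 1)]` and the total reward is 2.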

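The environment is unknown to AIXI, so the agent has to take all possible environments into consideration: every program \(q\) that, run on the universal Turing machine \(U\) with the agent's actions as input, reproduces the observations and rewards seen so far. AIXI has a bias towards simplicity: each such program is weighted by \(2^{-\ell(q)}\), where \(\ell(q)\) is its length in bits, so simpler (shorter) environments contribute more to the agent's next action.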
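The effect of the simplicity weight can be seen with a few lines of arithmetic. In this sketch, each candidate program is reduced to an assumed length and the percept string it would print; the candidates and their lengths are hypothetical, and actions are ignored, so this is closer to Solomonoff-style sequence prediction than to full AIXI. Every program that matches the history gets weight \(2^{-\ell(q)}\), and the weights are then normalized.

```python
from fractions import Fraction

# Hypothetical candidate programs q: an assumed length l(q) in bits and the
# percept string each would print. Actions are ignored here for brevity.
CANDIDATES = {
    "q_short": (3, "1111"),
    "q_medium": (5, "1100"),
    "q_long": (9, "1101"),
    "q_shortest_but_wrong": (2, "0000"),
}


def posterior(history):
    """Weight each program consistent with the history by 2^-l(q), then normalize."""
    weights = {
        name: Fraction(1, 2 ** length)
        for name, (length, output) in CANDIDATES.items()
        if output.startswith(history)
    }
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def predict_next(history, symbol):
    """Probability that the next percept is `symbol` under the weighted mixture."""
    return sum(
        (w for name, w in posterior(history).items()
         if CANDIDATES[name][1][len(history)] == symbol),
        Fraction(0),
    )
```

After the history `"11"`, the 3-bit program receives 64/81 of the weight, so the mixture predicts a next percept of `1` with probability 64/81. After `"110"` that program is ruled out and the 5-bit program takes over with 16/17. The 2-bit program never counts: simplicity only ranks the explanations that fit the data.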

References

- Universal Intelligence: A Definition of Machine Intelligence - Shane Legg, Marcus Hutter, arXiv:0712.3329v1

- Universal Artificial Intelligence - Hutter's website

- Can Intelligence Explode? - Video lecture at the Singularity Summit Australia 2012

Related

- Marcus Hutter

- Jürgen Schmidhuber

- Josef “Sepp” Hochreiter

- Is AIXI a big deal in AGI research? @ StackExchange AI

- Agent-Environment image adapted from: Solomonoff Cartesianism